Multi-Model Switching

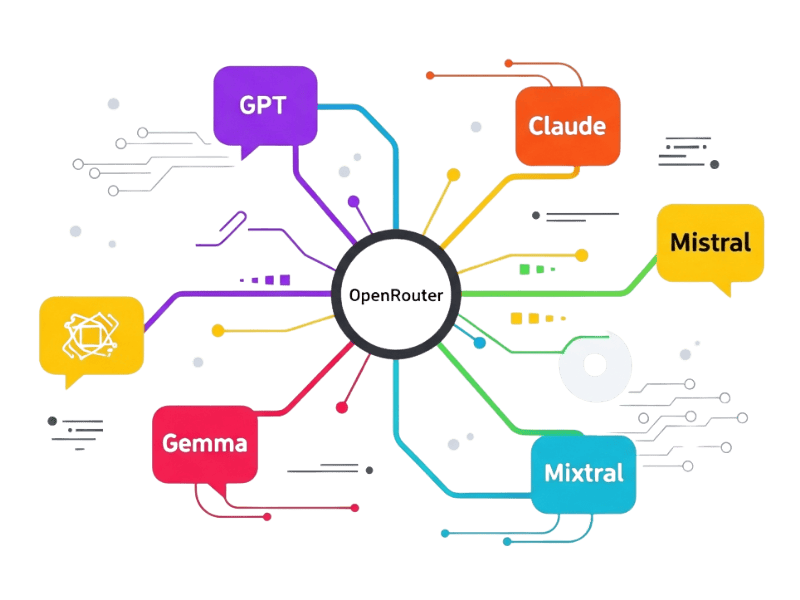

Choose from GPT, Claude, Mistral, Gemma, and more through one dynamic API.

Switch between top AI models dynamically and cost-effectively, all from a single, unified integration.

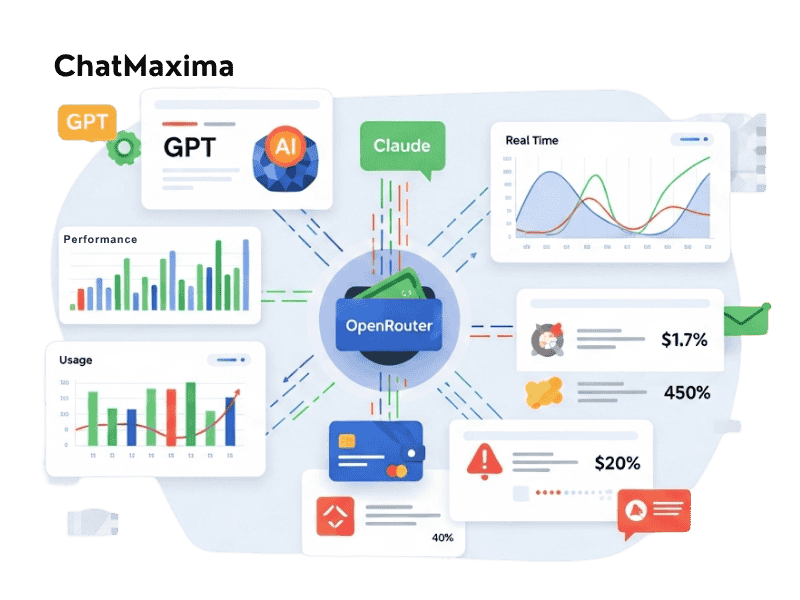

Discover the cutting-edge features that make ChatMaxima + OpenRouter integration the ultimate tool for streamlined workflows. Empower your team to harness advanced AI functionality for seamless communication and decision-making.


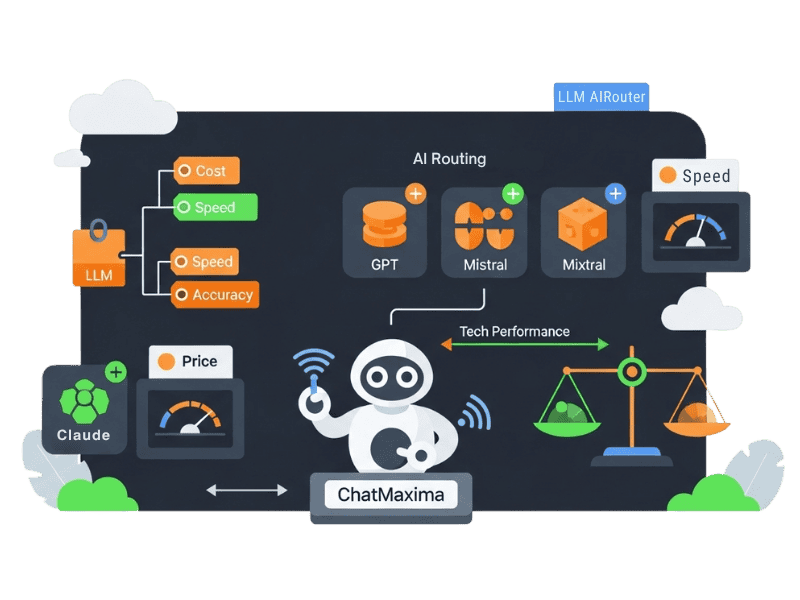

Balance response quality and pricing by routing to the right model per request.
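As a rough illustration of per-request routing, the sketch below sends short, low-stakes messages to a cheaper model and escalates long or sensitive ones. The model labels, prices, keyword list, and word threshold are all hypothetical placeholders, not real OpenRouter slugs or ChatMaxima settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    model_id: str               # placeholder label, not a real model slug
    cost_per_1k_tokens: float   # made-up price, for illustration only


# Hypothetical tiers: one inexpensive model, one stronger and pricier model.
CHEAP = ModelTier("example/small-fast-model", 0.0002)
PREMIUM = ModelTier("example/large-reasoning-model", 0.01)

# Assumed signals that a message deserves the stronger model.
ESCALATION_KEYWORDS = ("refund", "contract", "legal", "debug")


def pick_model(message: str, max_cheap_words: int = 40) -> ModelTier:
    """Return the cheap tier unless the message is long or looks high-stakes."""
    text = message.lower()
    if len(text.split()) > max_cheap_words:
        return PREMIUM
    # Simple substring match; a production router would use a better classifier.
    if any(keyword in text for keyword in ESCALATION_KEYWORDS):
        return PREMIUM
    return CHEAP
```

A keyword heuristic like this is only a starting point; the same function shape works if the decision is later replaced by a classifier or a confidence score.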


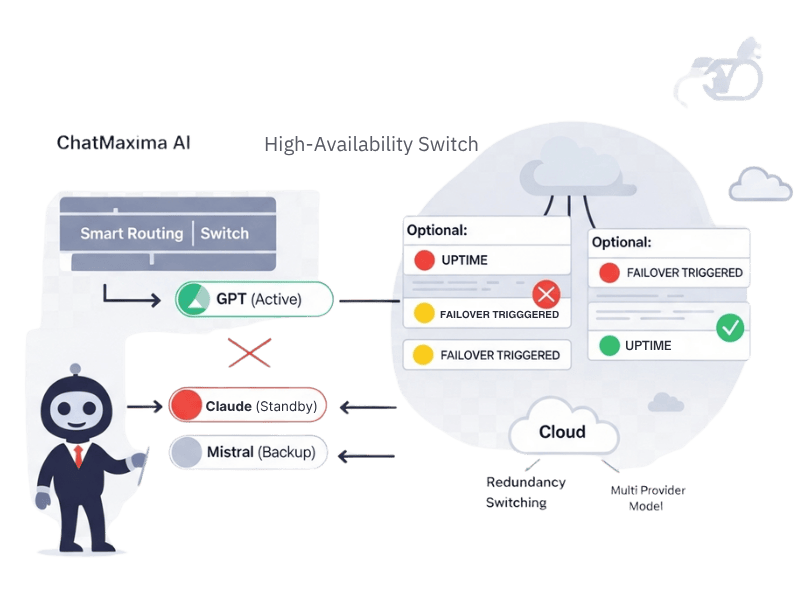

Maintain uptime by dynamically shifting to backup models during downtime.
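One way to picture this failover behavior is a loop that tries each model in priority order and moves on when a call fails. This is a minimal sketch, not ChatMaxima's or OpenRouter's actual mechanism: `complete_with_fallback`, `AllModelsFailed`, and the `call_model` callback are names assumed here for illustration.

```python
from typing import Callable, Sequence, Tuple


class AllModelsFailed(RuntimeError):
    """Raised when every model in the fallback chain has failed."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order and return (model_id, reply) from the first success.

    `call_model(model_id, prompt)` is a caller-supplied function that performs
    the real request and raises an exception on failure.
    """
    errors = []
    for model_id in models:
        try:
            return model_id, call_model(model_id, prompt)
        except Exception as exc:  # any failure triggers the next fallback
            errors.append(f"{model_id}: {exc}")
    raise AllModelsFailed("; ".join(errors))
```

Passing the request function in as a parameter keeps the fallback logic testable without network access.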


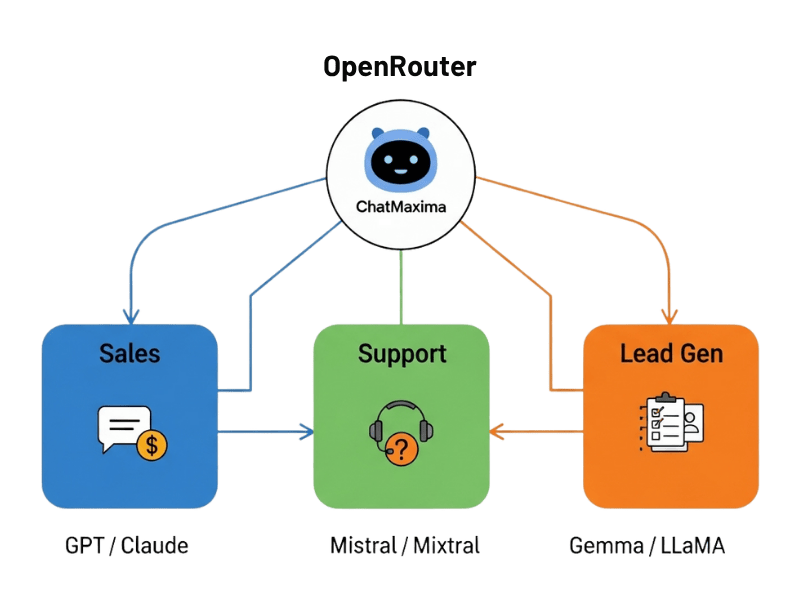

Assign different providers to sales, support, or lead gen bots for specialization.
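Conceptually, this kind of specialization amounts to a lookup from bot role to model. The sketch below assumes hypothetical role names and placeholder model labels; in ChatMaxima the assignment is made in the bot builder rather than in code.

```python
# Placeholder model labels; substitute whichever models you select in practice.
DEFAULT_MODEL = "example/general-model"

BOT_MODELS = {
    "sales": "example/persuasive-chat-model",
    "support": "example/long-context-model",
    "lead_gen": "example/small-fast-model",
}


def model_for_bot(bot_role: str) -> str:
    """Return the model assigned to a bot role, or the default if none is set."""
    return BOT_MODELS.get(bot_role.strip().lower(), DEFAULT_MODEL)
```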


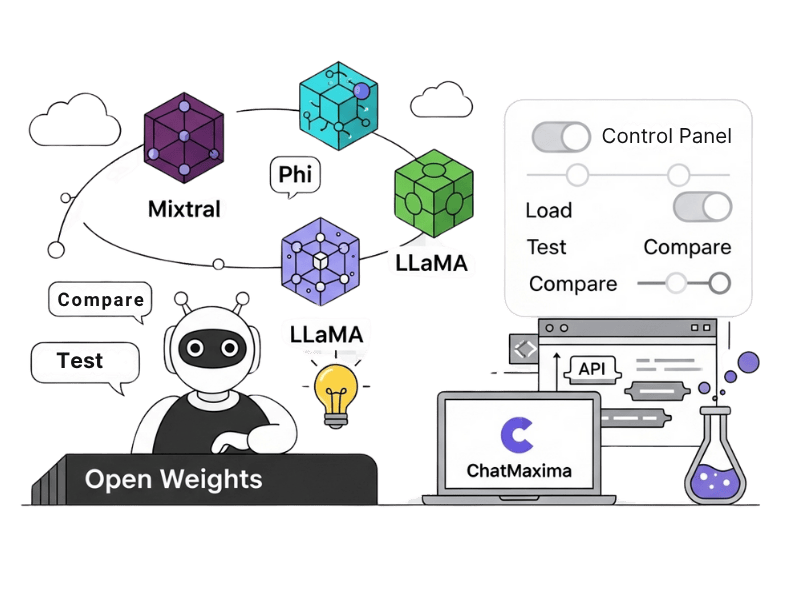

Experiment with models like Mixtral, Phi, or LLaMA without additional infrastructure.


Track usage, cost, and performance centrally with one dashboard.
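To make centralized tracking concrete, the following sketch aggregates requests, tokens, cost, and latency per model. It is an assumption about what such a dashboard might compute, not a description of ChatMaxima's implementation; `UsageTracker` and its fields are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict


@dataclass
class ModelUsage:
    requests: int = 0
    tokens: int = 0
    cost_usd: float = 0.0
    total_latency_s: float = 0.0

    @property
    def avg_latency_s(self) -> float:
        """Mean latency per request, or 0.0 before any request is recorded."""
        return self.total_latency_s / self.requests if self.requests else 0.0


class UsageTracker:
    """Accumulates per-model usage so it can be reported in one place."""

    def __init__(self) -> None:
        self._by_model: Dict[str, ModelUsage] = defaultdict(ModelUsage)

    def record(self, model_id: str, tokens: int, cost_usd: float, latency_s: float) -> None:
        usage = self._by_model[model_id]
        usage.requests += 1
        usage.tokens += tokens
        usage.cost_usd += cost_usd
        usage.total_latency_s += latency_s

    def summary(self) -> Dict[str, ModelUsage]:
        return dict(self._by_model)

    def total_cost(self) -> float:
        return sum(usage.cost_usd for usage in self._by_model.values())
```

Calling `record(...)` once per completed request is enough to answer questions such as which model costs the most or responds the slowest.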


Integrating ChatMaxima with OpenRouter unlocks limitless possibilities for enhancing AI-driven insights, refining workflows, and automating tasks across diverse industries.

DeepSeek-R1-0528 is a lightly upgraded release of DeepSeek R1 that uses more compute and improved post-training techniques, bringing its reasoning and inference close to flagship models such as o3 and Gemini 2.5 Pro. It now tops math, programming, and logic leaderboards, a step change in depth of thought. The distilled variant, DeepSeek-R1-0528-Qwen3-8B, transfers this chain-of-thought into an 8B-parameter model, beating standard Qwen3 8B by 10 percentage points and matching the 235B “thinking” model on AIME 2024.

The May 28th update to the [original DeepSeek R1](/deepseek-r1). Performance is on par with [OpenAI o1](/openai/o1), but the model is open-source, with fully open reasoning tokens. It has 671B parameters, of which 37B are active in an inference pass.

Sarvam-M is a 24B-parameter, instruction-tuned derivative of Mistral-Small-3.1-24B-Base-2503, post-trained on English plus ten major Indic languages (bn, hi, kn, gu, mr, ml, or, pa, ta, te). The model introduces a dual-mode interface: “non-think” for low-latency chat and an optional “think” phase that exposes chain-of-thought tokens for more demanding reasoning, math, and coding tasks. Benchmark reports show solid gains over similarly sized open models on Indic-language QA, GSM-8K math, and SWE-Bench coding, making Sarvam-M a practical general-purpose choice for multilingual conversational agents as well as analytical workloads that mix English, native Indic scripts, or romanized text.

Devstral-Small-2505 is a 24B parameter agentic LLM fine-tuned from Mistral-Small-3.1, jointly developed by Mistral AI and All Hands AI for advanced software engineering tasks. It is optimized for codebase exploration, multi-file editing, and integration into coding agents, achieving state-of-the-art results on SWE-Bench Verified (46.8%). Devstral supports a 128k context window and uses a custom Tekken tokenizer. It is text-only, with the vision encoder removed, and is suitable for local deployment on high-end consumer hardware (e.g., RTX 4090, 32GB RAM Macs). Devstral is best used in agentic workflows via the OpenHands scaffold and is compatible with inference frameworks like vLLM, Transformers, and Ollama. It is released under the Apache 2.0 license.

Gemma 3n E4B-it is optimized for efficient execution on mobile and low-resource devices, such as phones, laptops, and tablets. It supports multimodal inputs (text, visual data, and audio), enabling tasks such as text generation, speech recognition, translation, and image analysis. Using Per-Layer Embedding (PLE) caching and the MatFormer architecture, Gemma 3n selectively loads and activates model parameters based on the task or device capabilities, which significantly reduces memory use and compute at runtime. The model was trained on over 140 languages and has a 32K-token context window, making it well suited to privacy-focused, offline-capable, on-device applications. [Read more in the blog post](https://developers.googleblog.com/en/introducing-gemma-3n/).

Explore how businesses across various sectors use ChatMaxima + OpenRouter integration to leverage AI-driven analytics, boost engagement, and drive innovation forward.

Switch seamlessly between GPT, Claude, Mistral, and more from a single API within ChatMaxima.

Route low-risk queries to efficient models and escalate complex ones smartly.

Test new models rapidly before scaling bot deployments.

Ensure bot uptime by rerouting across fallback models in real time.

No technical expertise required! ChatMaxima makes deploying OpenRouter’s capabilities straightforward with simple tools and an intuitive interface.

Log in to ChatMaxima, navigate to the Integrations section, and click “Add Integration.” Select "OpenRouter" as the platform, provide a name for the integration, and enter your API key to securely link your OpenRouter account.

Open ChatMaxima Studio and click “Create Bot.” Use the drag-and-drop builder and choose “MaxIA – AI Assistant” as your engine to begin creating your AI-powered chatbot.

Select "OpenRouter" as your LLM provider, then choose a model based on the selected LLM type. Configure system prompts and integrate knowledge sources to design intelligent, dynamic, and context-aware chat experiences.
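For readers curious about what these settings correspond to, the sketch below builds (but does not send) a chat request against OpenRouter's OpenAI-compatible chat completions endpoint. How ChatMaxima calls OpenRouter internally is not described here, so treat this as an assumption; the `build_chat_request` helper and the model label are hypothetical.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(
    api_key: str, model: str, system_prompt: str, user_message: str
) -> urllib.request.Request:
    """Build a POST request; switching models only changes the `model` field."""
    payload = {
        "model": model,  # e.g. a placeholder such as "example/some-model"
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the key entered in the integration
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request, for example with `urllib.request.urlopen(request)`, requires a valid API key and a model identifier that exists on OpenRouter.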

Integrating ChatMaxima with OpenRouter unlocks immense potential, but other AI providers can complement it, depending on your specific requirements.

OpenAI is an industry leader in conversational AI and the maker of GPT-4, GPT-4o, and GPT-3.5. It integrates with ChatMaxima to create intelligent, natural-sounding chatbots, AI assistants, and voice agents with strong reasoning and creativity.

Anthropic, the maker of the Claude models, is known for ethical alignment and long-context handling. Through ChatMaxima, it is well suited to building trust-centric bots in sensitive domains (health, finance, HR) that need nuanced and safe interactions.

Gemini, Google’s multimodal AI family (including Gemini Flash and Pro), supports image, voice, and long-text processing. It enhances ChatMaxima bots with deep reasoning, rich context understanding, and large context windows.

Global businesses harness the synergy of ChatMaxima + OpenRouter to drive innovation, transform workflows, and deliver customer engagement powered by advanced AI tools.

Redefine how your bots use AI with the OpenRouter integration. From automating model routing to improving scalability and performance, OpenRouter combined with ChatMaxima offers the tools your business needs to thrive.

We know exploring OpenRouter AI models can raise questions. Here are the most commonly asked questions about the ChatMaxima + OpenRouter integration. For more details, our support team is just a message away!

No, OpenRouter AI models are designed with user-centric features, making them accessible to users of all proficiency levels.

Yes, OpenRouter excels at managing and optimizing distributed systems, ensuring seamless communications across complex networks.

OpenRouter implements advanced security frameworks, including data encryption and intrusion detection, to safeguard your systems.

Absolutely. With AI-powered automation, OpenRouter simplifies complex routing processes, saving time and reducing errors.

Yes, OpenRouter seamlessly integrates with hybrid cloud and on-premises infrastructures, promoting flexibility across platforms.

Yes, OpenRouter AI models provide detailed real-time analytics to help monitor and optimize network performance effectively.

Industries like telecommunications, logistics, and IT services can benefit significantly from OpenRouter’s advanced routing and network optimization capabilities.

Certainly. OpenRouter AI is equipped with predictive analytics to identify potential network problems and offer proactive resolutions.

Yes, OpenRouter is highly scalable and can be optimized for both small-scale networks and enterprise-level infrastructures alike.

OpenRouter has robust recovery protocols and diagnostic tools to minimize impacts and ensure rapid resumption of operations.