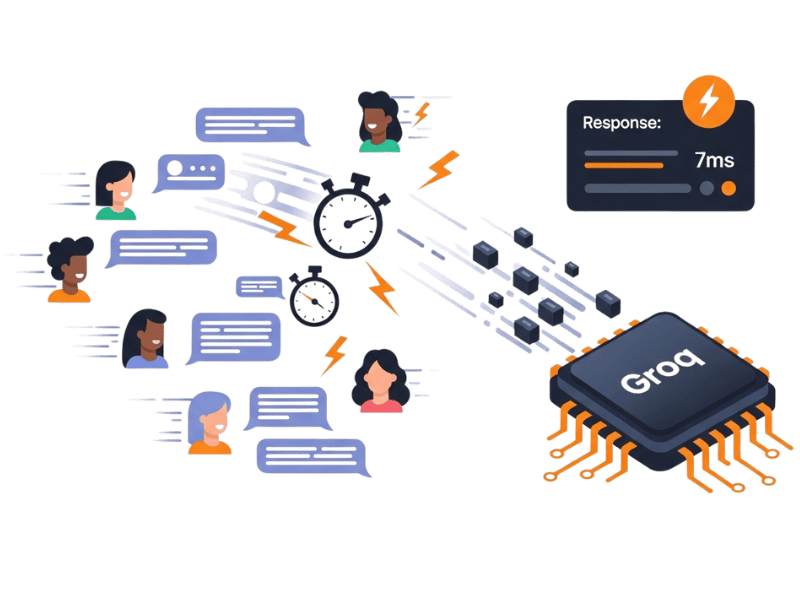

Lightning-Fast Response Time

Handle real-time conversations with industry-leading low-latency inference optimized for speed.

Deliver blazing-fast customer interactions using Groq’s low-latency AI infrastructure, ideal for high-volume, real-time workflows.

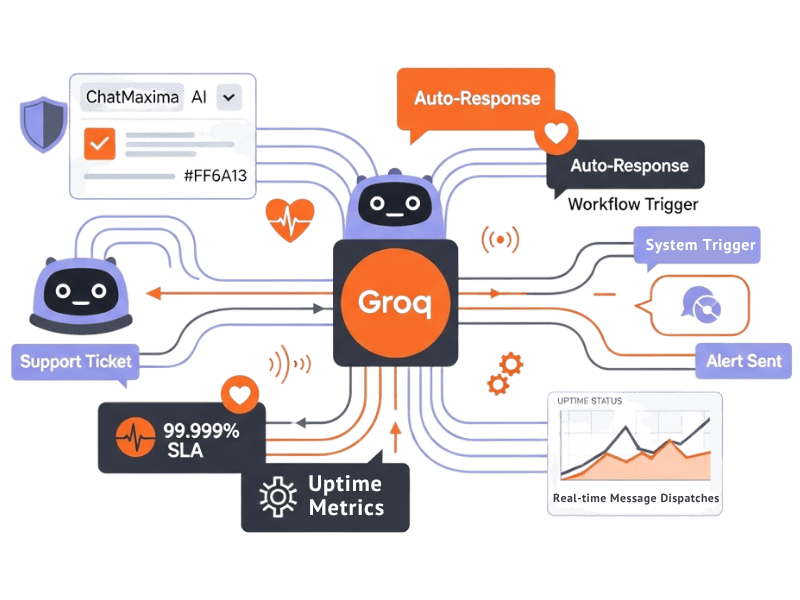

Discover the cutting-edge features that make ChatMaxima + Groq integration the ultimate tool for streamlined workflows. Empower your team to harness advanced AI functionality for seamless communication and decision-making.

Scale your bots to handle thousands of concurrent queries without lag or degradation.

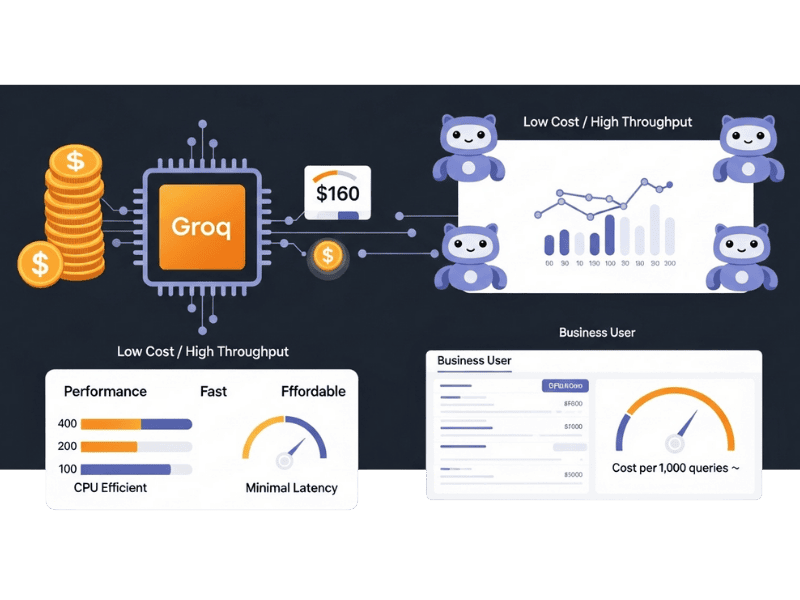

Reduce AI usage costs at scale without compromising performance or responsiveness.

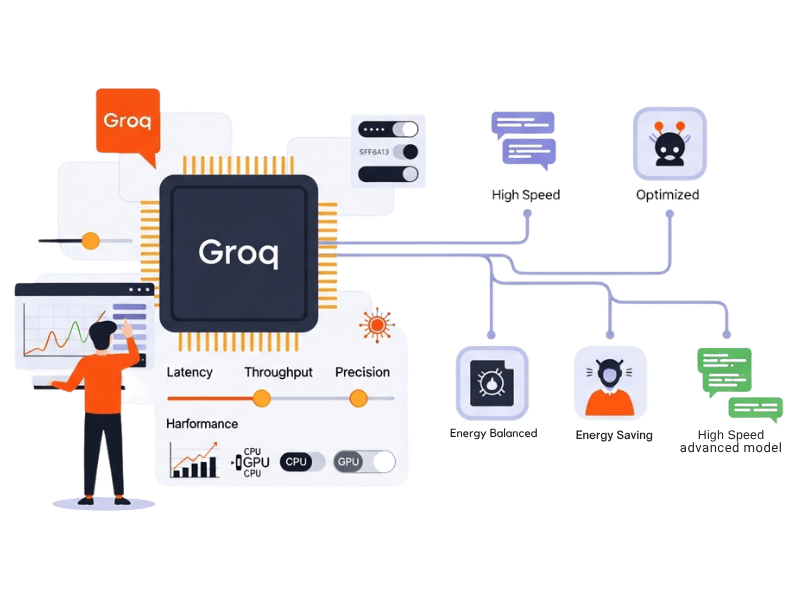

Adapt your AI processing stack by controlling how models execute via Groq’s hardware tuning.

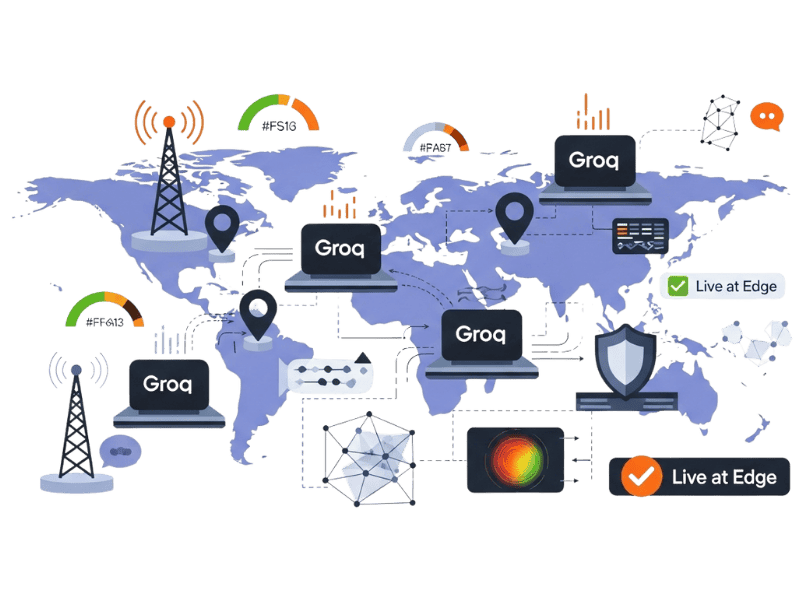

Run ChatMaxima-powered bots on high-speed edge servers using Groq hardware acceleration.

Ideal for high-traffic, mission-critical workflows in support, automation, and alerts.

Integrating ChatMaxima with Groq unlocks limitless possibilities for enhancing AI-driven insights, refining workflows, and automating tasks across diverse industries.

Groq Compound is a high-performance compound AI system running on Groq's LPU infrastructure, combining multiple model components for accelerated reasoning and generation throughput.

Groq Compound Mini is a streamlined compound AI system on Groq's LPU hardware, offering efficient multi-component reasoning with fast throughput for lightweight AI workflows.

Llama 3.3 70B Versatile by Meta is a high-capacity general-purpose language model offering strong performance across reasoning, coding, summarization, and multilingual NLP tasks.

Llama 3.1 8B Instant by Meta delivers fast, accurate text generation with an 8-billion-parameter architecture, optimized for rapid deployment and low-latency conversational applications.

OpenAI GPT-OSS 120B is a 120-billion-parameter open-source language model offering near-frontier reasoning and generation capabilities for research and enterprise AI integration.

OpenAI GPT-OSS 20B is a 20-billion-parameter open-source language model providing strong instruction-following and reasoning capabilities for cost-efficient enterprise AI development.

Orpheus V1 English by Canopy Labs is an expressive English text-to-speech model delivering highly natural, human-like voice synthesis with nuanced emotional and prosodic variation.

Orpheus Arabic Saudi by Canopy Labs is a specialized text-to-speech model delivering natural Saudi Arabic voice synthesis with authentic dialect, prosody, and cultural expressiveness.

Explore how businesses across various sectors use ChatMaxima + Groq integration to leverage AI-driven analytics, boost engagement, and drive innovation forward.

Deliver instant replies for high-traffic bots handling FAQs, support tickets, or transaction queries.

Power intelligent message routing at lightning speeds across workflows using low-latency inference.

Deploy bots that handle large outbound campaigns without compromising performance.

Parse incoming messages in real time to trigger workflows or detect sentiment instantly.

No technical expertise required! ChatMaxima makes deploying Groq capabilities straightforward with simple tools and an intuitive interface.

Log in to ChatMaxima, navigate to the Integrations section, and click “Add Integration.” Select "Groq" as the platform, provide a name for the integration, and enter your API key to securely link your Groq account.

Open ChatMaxima Studio and click “Create Bot”. Use the drag-and-drop builder and choose 'MaxIA – AI Assistant' as your engine to begin creating your AI-powered chatbot.

Select "Groq" as your LLM provider, then choose a model based on the selected LLM type. Configure system prompts and integrate knowledge sources to design intelligent, dynamic, and context-aware chat experiences.
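For readers curious what the three settings above (API key, model, and system prompt) correspond to outside the visual builder, here is a minimal Python sketch of a direct chat request to Groq. It is not ChatMaxima's implementation. The endpoint URL, the model ID `llama-3.1-8b-instant`, and the helper names `build_chat_request` and `send_chat_request` are assumptions made for illustration; check Groq's own API documentation for current values.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible chat completions endpoint.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_chat_request(api_key, model, system_prompt, user_message):
    """Build (without sending) an HTTP request for a single chat turn."""
    payload = {
        "model": model,  # assumed model ID, e.g. "llama-3.1-8b-instant"
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the assistant's reply text.

    Assumes an OpenAI-style response with a `choices` list.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, build a request with your own Groq API key, a model ID, a system prompt such as "You are a concise support assistant.", and a user message, then pass the result to `send_chat_request`. Keeping the build step separate from the network call makes the payload easy to inspect before anything is sent.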

Integrating ChatMaxima with Groq unlocks immense potential, but other AI providers can complement it, depending on your specific requirements.

OpenAI is an industry leader in conversational AI and the maker of GPT-4, GPT-4o, and GPT-3.5. Its models integrate seamlessly with ChatMaxima to create intelligent, natural-sounding chatbots, AI assistants, and voice agents with strong reasoning and creativity.

Anthropic, the maker of the Claude models, is known for ethical alignment and long-context handling. Its models are well suited to building trust-centric bots in sensitive domains (health, finance, HR) via ChatMaxima, with nuanced and safe interactions.

Google’s multimodal Gemini family, including Gemini Flash and Pro, supports image, voice, and long-text processing. It enhances ChatMaxima bots with deep reasoning, rich context understanding, and long context windows.

Global businesses harness the synergy of ChatMaxima + Groq to drive innovation, transform workflows, and deliver customer engagement powered by advanced AI tools.

Unlock unparalleled AI capabilities with Groq integration. Whether you're looking to accelerate data processing, automate workflows, or optimize performance, Groq combined with ChatMaxima paves the way for smarter operations and innovative solutions.

We know exploring Groq AI models can raise questions. Here are the most commonly asked questions about the ChatMaxima + Groq integration. For more details, our support team is just a message away!

No, Groq models are designed with user-friendly interfaces and simplified workflows, making them accessible even for users without prior AI experience.

Yes, Groq models leverage high-performance computing capabilities, allowing seamless scaling for small to large-scale operations, tailored to your workflow needs.

Absolutely. Groq models are optimized for real-time data processing, ensuring quick and efficient decision-making for time-sensitive applications.

Groq integrations prioritize data security with advanced encryption protocols and compliance with industry standards, ensuring your information is always safe.

Yes, Groq models are built to integrate seamlessly across multiple platforms, providing flexibility for diverse business ecosystems.

Certainly. Groq's adaptable architecture allows customization to meet the specific requirements of various industries, from finance to healthcare and beyond.

Groq models excel in handling high-volume datasets with precision and speed, making them ideal for data-intensive tasks and analytical needs.

Groq's versatile AI models benefit industries like healthcare, finance, retail, logistics, and manufacturing, among others, enhancing automation, analytics, and operational efficiency.

Yes, Groq's architecture is built to accelerate inference, helping teams get faster results from their machine learning models.

In case of interruptions, Groq systems are equipped with robust fail-safe mechanisms and recovery protocols to ensure minimal disruption to operations.