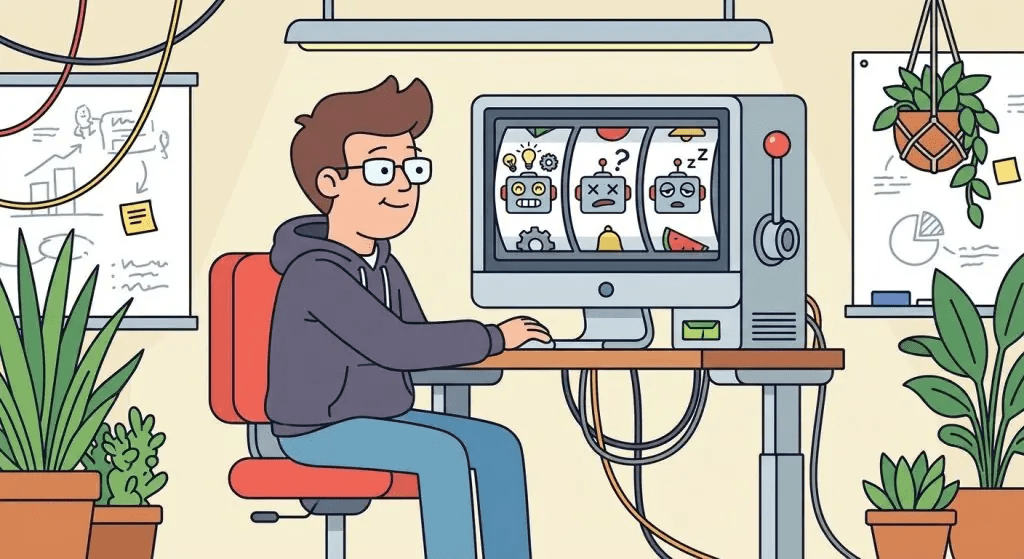

Yesterday, Claude wrote flawless Python code. Today? It forgot how to close a bracket.

Sound familiar?

If you’ve spent any meaningful time working with AI platforms like ChatGPT, Claude, or Gemini, you’ve probably noticed something strange. Some days the output is brilliant, insightful, and exactly what you needed. Other days, the same prompt produces something that makes you wonder if the AI had too much coffee. Or not enough.

When humans underperform, we blame sleep, stress, or Monday mornings. When AI underperforms, users across Reddit, Twitter, and countless Slack channels shout the same thing: “They nerfed it!”

But here’s the thing. AI doesn’t get tired. It doesn’t have bad days. And yet the inconsistency is real. So what’s actually happening?

What “Nerfing” Really Means

The term “nerfing” comes from gaming, where developers deliberately weaken overpowered characters or weapons to balance gameplay. When applied to AI, users believe companies are secretly downgrading their models to cut costs or push users toward premium plans.

The conspiracy is tempting. It feels like an explanation for the unexplainable.

But the reality is both more mundane and more interesting. The inconsistency you experience isn’t a conspiracy. It’s probability.

The Four Real Reasons AI Seems Inconsistent

1. Load Balancing and Model Routing

Here’s something most users don’t realize: on subscription plans, you may not always be talking to the same model.

During peak hours, a platform can route requests to a smaller, faster model to handle demand. Same interface. Same chat window. Different neural network responding to you. This isn’t deception. It’s infrastructure management. But where it happens, it would explain why your 2 AM coding session might feel sharper than your noon meeting prep.

Some platforms are transparent about this. Others aren’t. Either way, the model answering your question at 10 AM might be fundamentally different from the one at 10 PM.

2. Context Window Fatigue

Every AI model has a context window: the amount of text it can “see” and remember during a conversation. For modern models, this ranges from a few thousand tokens to a million or more.

Here’s the problem. As your conversation grows, the AI isn’t getting smarter with more context. It’s getting overwhelmed. Long conversations lead to degraded outputs not because the AI is dumber, but because it’s drowning in information. Important details from early in the conversation get compressed, distorted, or effectively forgotten.

That brilliant assistant from message one? By message fifty, it’s working with a fraction of the original clarity.
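To make the idea concrete, here is a minimal sketch of the simplest way early details can drop out: a fixed token budget that keeps only the most recent messages. This is an illustration under stated assumptions, not how any particular platform works. The `estimate_tokens` and `fit_to_window` helpers are hypothetical, and the one-token-per-word estimate is deliberately crude. Real systems may summarize or compress instead of truncating.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly one token per word. Real tokenizers differ.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined estimate fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


conversation = [
    "Use Python 3.11 and never use global variables.",
    "Here is the first draft of the parser.",
    "Now add error handling for empty input.",
    "Also add logging to every function.",
]

visible = fit_to_window(conversation, max_tokens=22)
print(visible)
```

With a budget of 22 estimated tokens, the three newest messages fit and the very first instruction (the one about global variables) is the one that falls out. That is the kind of early detail the model can no longer act on.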

3. Temperature and Configuration Changes

Behind every AI response is a parameter called “temperature” that controls randomness. Low temperature means predictable, focused answers. High temperature means creative, varied, sometimes chaotic responses.

Slight configuration changes on the backend can produce noticeably different outputs for identical prompts. A temperature shift from 0.7 to 0.9 might be invisible to you but still change how often the AI picks a less likely word. You’ll never know it happened, and the platform has no obligation to tell you.
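For the curious, here is a small sketch of how temperature is commonly applied: the model’s raw scores are divided by the temperature before being turned into probabilities with a softmax. The scores below are made up for illustration, and `softmax_with_temperature` is a hypothetical helper name, not any platform’s API.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, scaled by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

for t in (0.2, 0.7, 0.9, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature nearly all of the probability sits on the top candidate. As the temperature rises, the distribution flattens and the less likely candidates get picked more often.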

4. Your Prompts Changed, Not the Model

This is the uncomfortable one.

We blame the AI. But sometimes the problem is us. The way you phrase a question on Tuesday might be subtly different from how you phrased it on Monday. You might provide less context, use different terminology, or approach the problem from another angle.

Small changes in input can create large changes in output. That’s not a bug. That’s how language models work.

How to Get Consistent AI Outputs

Understanding the problem is half the battle. Here’s how to solve it.

Keep conversations short and focused. Don’t let context window fatigue degrade your results. For complex tasks, start fresh threads rather than continuing marathon conversations.

Restart for complex tasks. If you need the AI at its best, begin a new conversation and paste in only the context that still matters. Give it a clean slate without accumulated context baggage.

Save prompts that work. When you get a great result, save that exact prompt. Reuse it. Don’t try to recreate it from memory. Prompts are recipes, and recipes need precise ingredients.

Use API access for mission-critical work. Consumer chat interfaces are convenient but unpredictable. If consistency matters, API access gives you control over model selection, temperature, and other parameters that affect output quality.

Be explicit about what you want. Vague prompts produce vague results. Specific, structured prompts with clear expectations produce consistent outputs across sessions.
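If you want to treat prompts like recipes, one possible approach is to store the exact wording together with the settings you used. The sketch below is an assumption-laden example, not a prescribed format: `PromptRecipe` is a hypothetical class, and `example-model-v1` is a placeholder rather than a real model identifier.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PromptRecipe:
    name: str
    model: str          # placeholder identifier, not a real model name
    temperature: float  # pinned so reruns use the same setting
    template: str       # the exact wording that worked

    def render(self, **fields: str) -> str:
        """Fill in the template's placeholders without changing its wording."""
        return self.template.format(**fields)


recipe = PromptRecipe(
    name="code-review",
    model="example-model-v1",
    temperature=0.2,
    template=(
        "Review the following {language} code. "
        "List bugs first, then style issues.\n\n{code}"
    ),
)

# Save to JSON and load it back, so the recipe survives between sessions.
saved = json.dumps(asdict(recipe), indent=2)
restored = PromptRecipe(**json.loads(saved))

print(restored.render(language="Python", code="print('hi')"))
```

The point of the design is that nothing is recreated from memory: the wording, the model choice, and the temperature all travel together.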

The Uncomfortable Truth About AI

We’ve built expectations of perfection for technology that’s fundamentally probabilistic.

Every time you press enter, the AI isn’t looking up an answer from a database. It’s generating a probability distribution over possible next tokens (roughly, word fragments), sampling from that distribution, and repeating. Hundreds or thousands of times per response. In seconds.

Most of the time, this process produces something remarkable. Sometimes, it doesn’t.

AI doesn’t have bad days. It has probability distributions. And sometimes, you land on the wrong tail of the curve.
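Here is a toy version of that sample-and-repeat loop. It is a sketch only: the probability table is invented for illustration, and a real model computes its distribution from the whole conversation rather than looking it up from the previous word.

```python
import random

# Invented next-token probabilities, for illustration only.
NEXT_TOKEN = {
    "<start>": [("the", 0.6), ("a", 0.4)],
    "the": [("code", 0.7), ("bug", 0.3)],
    "a": [("code", 0.5), ("bug", 0.5)],
    "code": [("works", 0.8), ("fails", 0.2)],
    "bug": [("works", 0.1), ("fails", 0.9)],
}


def generate(seed: int) -> list[str]:
    """Sample one token at a time until no continuation is defined."""
    rng = random.Random(seed)  # the seed stands in for the luck of the draw
    token = "<start>"
    output = []
    while token in NEXT_TOKEN:
        choices, weights = zip(*NEXT_TOKEN[token])
        token = rng.choices(choices, weights=weights, k=1)[0]
        output.append(token)
    return output


for seed in range(5):
    print(seed, " ".join(generate(seed)))
```

The same starting point produces different sentences depending on the draw, and the likeliest path is not the only one that shows up. Fixing the seed makes a run repeatable, which is a control that consumer chat interfaces typically don’t expose.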

That’s not a flaw to be fixed. It’s the nature of the technology. The sooner we accept this, the better we can work with these tools rather than against them.

What This Means for Your AI Strategy

If you’re building products or workflows around AI, inconsistency isn’t just annoying. It’s a design challenge.

The teams getting the best results aren’t the ones finding “perfect” AI tools. They’re the ones building systems that account for variability: validation layers, human review steps, and fallback mechanisms when AI outputs miss the mark.
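As a rough sketch of what such a system might look like, the example below wraps a model call in a validation layer, retries a few times, and falls back to flagging the item for human review. Everything here is hypothetical: `validate`, `call_with_fallback`, and the stand-in `make_flaky_model` are illustrative names, and the “model” is just a function that returns canned replies.

```python
import json
from typing import Callable, Optional


def validate(raw: str) -> Optional[dict]:
    """Validation layer: accept only a JSON object with a string 'summary'."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if isinstance(data, dict) and isinstance(data.get("summary"), str):
        return data
    return None


def call_with_fallback(
    model: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> dict:
    """Retry until the output validates, then fall back to human review."""
    for _ in range(max_attempts):
        result = validate(model(prompt))
        if result is not None:
            return result
    # Fallback: no attempt passed validation, so hand it to a person.
    return {"summary": None, "needs_human_review": True}


def make_flaky_model() -> Callable[[str], str]:
    """Stand-in for a real model: two bad replies, then a good one."""
    replies = iter([
        "Sure! Here is the summary",       # not JSON at all
        '{"summary": 42}',                 # JSON, but the wrong type
        '{"summary": "All tests pass."}',  # valid
    ])
    return lambda prompt: next(replies)


print(call_with_fallback(make_flaky_model(), "Summarize the build log as JSON."))
```

The design choice worth noticing is that the process never trusts a single draw. A bad output costs one retry, and a run of bad outputs ends with a person in the loop rather than a silent failure.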

Consistency in AI isn’t about finding the right model. It’s about designing the right process around inherently probabilistic technology.

Have you noticed AI inconsistency in your work? I’d love to hear your experiences and the workarounds you’ve discovered. The more we share, the better we all get at working with these remarkable but imperfect tools.