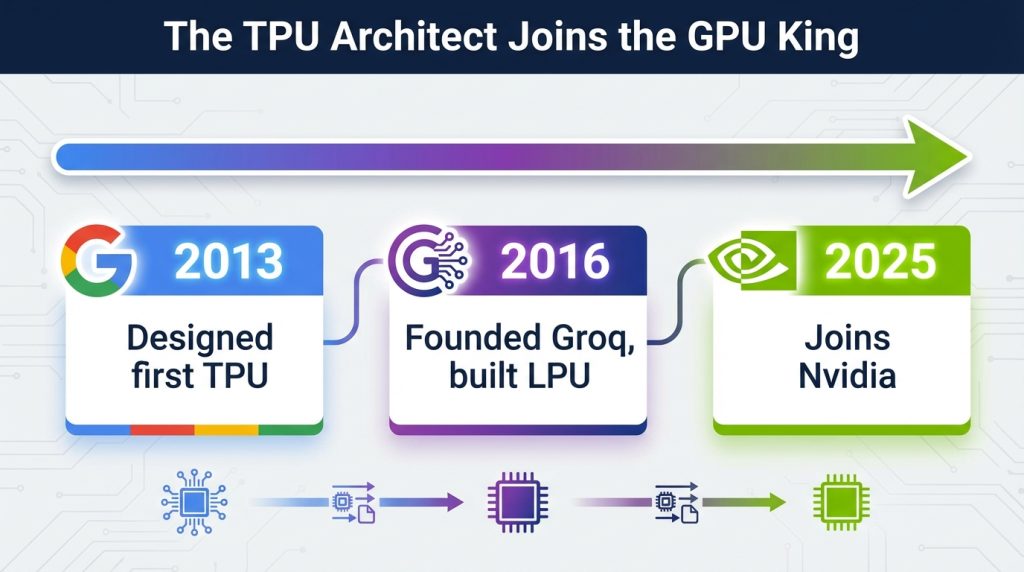

On Christmas Eve 2025, Nvidia quietly announced what could be the most strategically brilliant deal in the AI chip wars: a $20 billion non-exclusive licensing agreement with Groq, the inference chip startup founded by the architect of Google’s first TPU.

Not an acquisition. A licensing deal.

The distinction matters more than you might think.

The Inference Problem Nobody’s Talking About

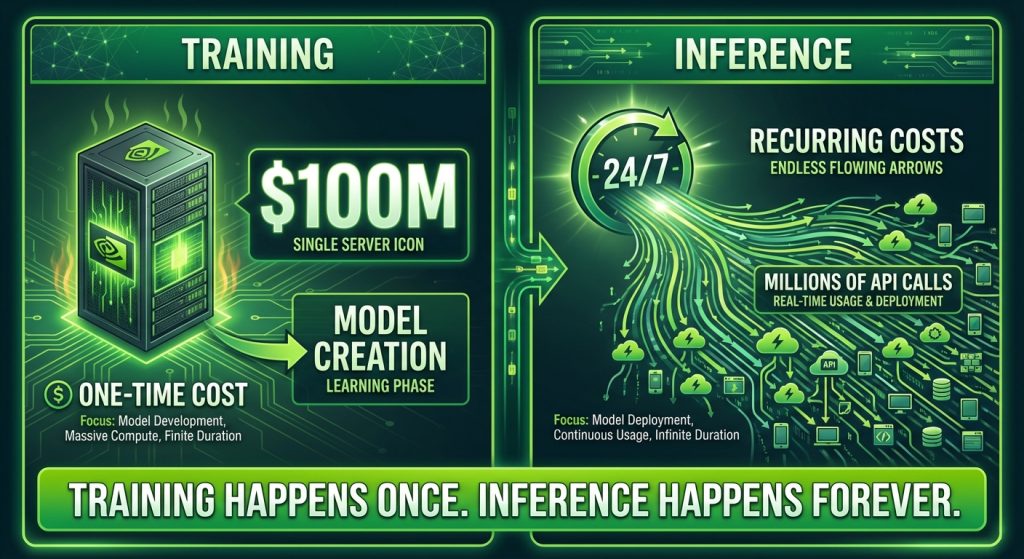

Here’s what most people miss about the AI industry: training a model is a one-time expense. Running it is forever.

Every time you ask ChatGPT a question, every time Midjourney generates an image, every time an AI agent completes a task, that’s inference. And it happens millions of times per day, across millions of users, 24/7.

Nvidia has dominated AI training. Their GPUs are the undisputed champions for building large language models. But inference? That’s a different battlefield entirely, and Nvidia faces real competition there from AMD, custom silicon from hyperscalers, and scrappy startups like Groq and Cerebras.

Groq’s pitch is compelling: the company says its LPU (Language Processing Unit) delivers 10x faster inference than traditional GPUs while using a fraction of the energy. It gets there by rethinking chip architecture from the ground up, keeping model data in on-chip SRAM instead of external high-bandwidth memory.

The result? Lightning-fast responses that make chatbot interactions feel instantaneous.
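Why would on-chip memory make such a difference? A common back-of-envelope argument: when a model generates text one token at a time for a single user, each new token requires reading essentially all of the model’s weights from memory, so memory bandwidth, not raw compute, sets the speed limit. The Python sketch below captures that bound. The function name and every number in it are illustrative assumptions, not Groq or Nvidia specifications. It deliberately ignores compute limits, batching, KV-cache traffic, and the chip-to-chip communication needed when a large model has to be split across many small-memory chips.

```python
# Back-of-envelope upper bound on single-stream decode speed.
# Assumption: at batch size 1, generating one token streams every weight from
# memory once, so tokens/s <= memory bandwidth / model size in bytes.
# Ignores compute, batching, KV-cache reads, and interconnect overhead.


def max_tokens_per_second(params_billions: float, bytes_per_param: float,
                          bandwidth_tb_per_s: float) -> float:
    """Upper bound on tokens/s when decoding is limited purely by weight reads."""
    model_bytes = params_billions * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_per_s * 1e12
    return bandwidth_bytes_per_s / model_bytes


if __name__ == "__main__":
    # Hypothetical inputs for illustration only, not vendor specs.
    MODEL_PARAMS_B = 70       # a 70B-parameter model
    BYTES_PER_PARAM = 1.0     # 8-bit weights
    OFF_CHIP_TB_S = 3.0       # assumed order of magnitude for external HBM
    ON_CHIP_TB_S = 80.0       # assumed aggregate on-chip SRAM bandwidth

    off_chip = max_tokens_per_second(MODEL_PARAMS_B, BYTES_PER_PARAM, OFF_CHIP_TB_S)
    on_chip = max_tokens_per_second(MODEL_PARAMS_B, BYTES_PER_PARAM, ON_CHIP_TB_S)
    print(f"off-chip memory bound: {off_chip:.1f} tokens/s")
    print(f"on-chip memory bound:  {on_chip:.1f} tokens/s")
```

With these made-up inputs the on-chip bound comes out roughly 27 times higher, simply because the assumed bandwidth is 80/3 times higher. The point is the proportionality, not the absolute numbers.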

The Talent Play

When Jensen Huang wrote to Nvidia employees about this deal, he was clear: “We are adding talented employees to our ranks and licensing Groq’s IP. We are not acquiring Groq as a company.”

But look at who’s moving to Nvidia:

Jonathan Ross, Groq’s founder and CEO, who designed Google’s first Tensor Processing Unit. Sunny Madra, Groq’s president. And “other members of the engineering team” according to the official announcement.

This is the TPU architect now working for Nvidia. The person who proved that specialized AI chips could challenge GPU dominance is joining the GPU king. That’s more than a technology deal. It’s a signal about where top chip talent thinks the future lies.

The Antitrust Genius

Here’s where Nvidia’s dealmaking gets clever.

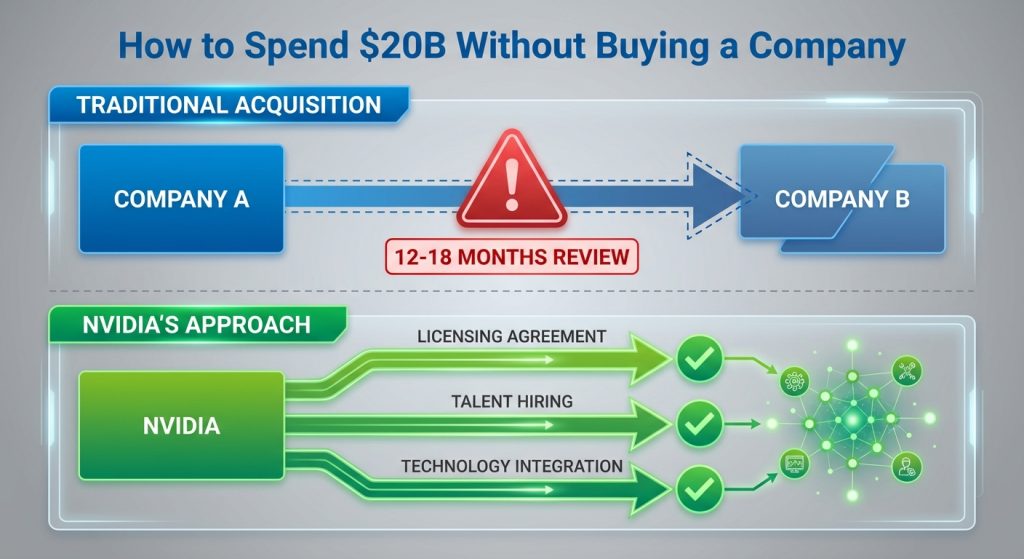

Full acquisitions attract regulators. The FTC and DOJ have been scrutinizing Big Tech M&A with increasing intensity. A $20 billion outright purchase of a competitor would likely face months or years of regulatory review.

But a licensing deal? Much cleaner.

Groq remains an independent company. Simon Edwards takes over as CEO. GroqCloud continues operating. On paper, competition remains intact. Nvidia gets the technology and the talent, but technically hasn’t acquired anyone.

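This playbook is becoming standard practice in AI. Microsoft brought in Mustafa Suleyman, now its top AI executive, through a roughly $650 million arrangement with Inflection AI that was framed largely as a licensing fee. Meta spent roughly $15 billion on a 49% stake in Scale AI and hired its CEO, Alexandr Wang, without buying the company outright. Amazon hired away Adept AI’s founders through a similar license-and-hire structure.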

Nvidia tried this approach earlier in 2025, paying over $900 million for Enfabrica’s talent and technology through a licensing arrangement. The Groq deal is the same strategy, but at 20x the scale.

Analysts are already noting the pattern. One Bernstein analyst observed that structuring the deal as a non-exclusive license “may keep the fiction of competition alive” even as Groq’s leadership and technical talent move to Nvidia.

What This Means for the AI Industry

Nvidia is positioning itself to dominate both sides of the AI compute equation.

For training, they already control an estimated 80% or more of the market. Their Hopper (H100) and Blackwell GPUs are the gold standard for building large language models.

Now, with Groq’s inference technology integrated into what Huang calls “the NVIDIA AI factory architecture,” they’re making a serious play for the inference market too.

The economics matter here. Training a frontier model might cost $100 million or more, but it’s a one-time expense per model. Inference costs scale with usage and never stop. As AI moves from research labs into production applications, inference becomes the larger market.
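To make that economics argument concrete, here is a toy Python model built on loudly hypothetical inputs: training is a single fixed cost, and inference costs a fixed amount per query. The $100 million training figure comes from the paragraph above; the query volume, per-query cost, and 30-day month are invented placeholders, and the function names are illustrative. It estimates how many months of serving it takes before cumulative inference spend overtakes the training bill.

```python
# Toy break-even model: fixed training cost vs. usage-driven inference cost.
# All inputs are hypothetical placeholders, not reported figures.

DAYS_PER_MONTH = 30  # simplifying assumption


def months_until_inference_exceeds_training(training_cost: float,
                                            queries_per_day: float,
                                            cost_per_query: float) -> float:
    """Months of serving after which cumulative inference spend equals training spend."""
    monthly_inference_cost = queries_per_day * cost_per_query * DAYS_PER_MONTH
    return training_cost / monthly_inference_cost


def cumulative_cost(training_cost: float, queries_per_day: float,
                    cost_per_query: float, months: float) -> tuple[float, float]:
    """Return (training, inference) spend after `months` of serving."""
    inference = queries_per_day * cost_per_query * DAYS_PER_MONTH * months
    return training_cost, inference


if __name__ == "__main__":
    TRAINING = 100e6          # "$100 million or more" from the text
    QUERIES_PER_DAY = 100e6   # hypothetical
    COST_PER_QUERY = 0.002    # hypothetical, in dollars

    months = months_until_inference_exceeds_training(
        TRAINING, QUERIES_PER_DAY, COST_PER_QUERY)
    print(f"inference spend passes training spend after ~{months:.1f} months")

    train, infer = cumulative_cost(TRAINING, QUERIES_PER_DAY, COST_PER_QUERY, months=36)
    print(f"after 3 years: training ${train / 1e6:.0f}M vs inference ${infer / 1e6:.0f}M")
```

Because inference spend is linear in usage, doubling the query volume halves the break-even time, while the training cost stays put. That asymmetry is the whole case for inference becoming the bigger market.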

Nvidia just bought its way into that market without technically buying anything.

The Bigger Picture

We’re entering what Nvidia clearly sees as “the age of inference.”

Every AI agent, every chatbot, every generated image, every automated workflow runs on inference. And the companies that control inference infrastructure will control the economics of AI deployment.

Nvidia’s move suggests they see the same future: a world where AI models are commoditized, but the chips that run them are not. Where training happens once, but inference happens forever. Where speed and efficiency matter more than raw training power.

The $20 billion question is whether this non-exclusive license is really non-exclusive in practice. Groq “remains independent,” but its best people are leaving. Its core technology is now available to Nvidia. Its cloud business continues, but now competes against a much more powerful Nvidia inference offering.

Sometimes the smartest acquisition isn’t an acquisition at all.

What do you think about Nvidia’s approach to the inference market? Is the licensing deal structure a clever regulatory workaround, or genuine strategic innovation? The AI chip wars are just getting started.